Read a Text File With Words With Commas in Nodejs

Introduction

Comma-separated values, also known as CSVs, are one of the most common and basic formats for storing and exchanging tabular datasets for statistical analysis tools and spreadsheet applications. Due to its popularity, it is not uncommon for governing bodies and other important organizations to share official datasets in CSV format.

Despite its simplicity, popularity, and widespread use, it is common for CSV files created using one application to display incorrectly in another. This is because there is no official universal CSV specification to which applications must adhere. As a result, several implementations exist with slight variations.

Most modern operating systems and programming languages, including JavaScript and the Node.js runtime environment, have applications and packages for reading, writing, and parsing CSV files.

In this article, we will learn how to manage CSV files in Node. We shall also highlight the slight variations in the different CSV implementations. Some popular packages we will look at include csv-parser, Papa Parse, and Fast-CSV.

We will also highlight the unofficial RFC 4180 technical standard, which attempts to document the format used by most CSV implementations.

What is a CSV file?

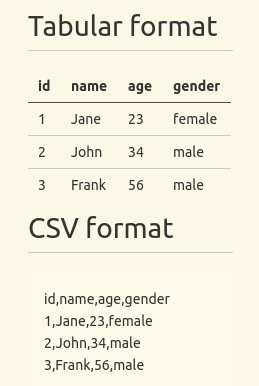

CSV files are ordinary text files comprised of data arranged in rectangular form. When you save a tabular data set in CSV format, a newline character separates successive rows, while a comma separates consecutive entries in a row. The accompanying image shows a tabular data set and its corresponding CSV format.

In the CSV data shown in the image, the first row is made up of field names, though that may not always be the case. Therefore, it is necessary to investigate your data set before you start to read and parse it.

Although the name Comma Separated Values seems to suggest that a comma should always separate subsequent entries in each record, some applications generate CSV files that use a semicolon as the delimiter instead of a comma.
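Because the delimiter can vary, it can help to inspect a file before parsing it. The sketch below is one possible heuristic, not a standard technique: it counts candidate delimiters in the first line and picks the most frequent. The guessDelimiter name and the list of candidates are assumptions made for illustration, and the heuristic assumes the first line contains no quoted delimiters.

```javascript
// Hypothetical helper: guess the delimiter by counting candidates in the first line.
// Assumption: the first line is representative and contains no quoted delimiters.
function guessDelimiter(text, candidates = [",", ";", "\t"]) {
  const firstLine = text.split(/\r?\n/, 1)[0];
  let best = candidates[0]; // fall back to the first candidate
  let bestCount = 0;
  for (const candidate of candidates) {
    // Number of occurrences of the candidate in the first line
    const count = firstLine.split(candidate).length - 1;
    if (count > bestCount) {
      best = candidate;
      bestCount = count;
    }
  }
  return best;
}

const commaData = "id,name,age\n1,Johny,45\n2,Mary,20";
const semicolonData = "id;name;age\n1;Johny;45\n2;Mary;20";

console.log(guessDelimiter(commaData));
console.log(guessDelimiter(semicolonData));
```

A guess like this is only a starting point; when you know which application produced the file, setting the delimiter explicitly is more reliable.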

As already mentioned, there is no official universal standard to which CSV implementations should adhere, even though the unofficial RFC 4180 technical standard does exist. However, this standard came into being many years after the CSV format gained popularity.
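One convention RFC 4180 documents is that a field containing a comma or a double quote is wrapped in double quotes, and a double quote inside such a field is written twice. The sketch below splits a single record along those lines. It is a simplified illustration rather than a complete parser: the parseCsvLine name is hypothetical, and the function assumes the record has no embedded line breaks.

```javascript
// Sketch of RFC 4180-style field splitting for a single record.
// Assumptions: the record contains no embedded line breaks, and the
// function name and signature are illustrative only.
function parseCsvLine(line, delimiter = ",") {
  const fields = [];
  let field = "";
  let inQuotes = false;

  for (let i = 0; i < line.length; i++) {
    const char = line[i];
    if (inQuotes) {
      if (char === '"') {
        if (line[i + 1] === '"') {
          field += '"'; // a doubled quote is an escaped quote
          i++;
        } else {
          inQuotes = false; // closing quote
        }
      } else {
        field += char; // delimiters inside quotes are plain text
      }
    } else if (char === '"') {
      inQuotes = true; // opening quote
    } else if (char === delimiter) {
      fields.push(field);
      field = "";
    } else {
      field += char;
    }
  }
  fields.push(field); // the last field has no trailing delimiter
  return fields;
}

console.log(parseCsvLine('1,"Doe, Johny",45'));
console.log(parseCsvLine('2,"Mary ""MJ"" Jane",20'));
```

A plain split on commas would break the first record into four pieces, which is why names or words that contain commas need quote-aware parsing.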

How to manage CSV files in Node.js

In the previous section, we had a brief introduction to CSV files. In this section, you will learn how to read, write, and parse CSV files in Node using both built-in and third-party packages.

Using the fs module

The fs module is the de facto module for working with files in Node. The code below uses the readFile function of the fs module to read from a data.csv file:

    const fs = require("fs");

    // Read the whole file into memory as a UTF-8 string
    fs.readFile("data.csv", "utf-8", (err, data) => {
      if (err) console.log(err);
      else console.log(data);
    });

The corresponding example below writes to a CSV file using the writeFile function of the fs module:

    const fs = require("fs");

    const data = `
    id,name,age
    1,Johny,45
    2,Mary,20
    `;

    // Write the CSV text to data.csv, replacing any existing content
    fs.writeFile("data.csv", data, "utf-8", (err) => {
      if (err) console.log(err);
      else console.log("Data saved");
    });

If you are not familiar with reading and writing files in Node, check out my complete tutorial on reading and writing JSON files in Node.
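When the values you write may themselves contain commas or quotes, the CSV text has to be built with some care before it is passed to the fs module. The following is a minimal sketch of that step, assuming flat objects and the quoting convention described in RFC 4180. The escapeField and toCsv helpers, the sample records, and the temporary file name are all hypothetical, and the synchronous writeFileSync is used only to keep the example short.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Quote a value only when it contains a comma, a double quote, or a line break.
function escapeField(value) {
  const text = value == null ? "" : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Hypothetical helper: serialize an array of flat objects to CSV text.
function toCsv(rows, headers) {
  const lines = [headers.map(escapeField).join(",")];
  for (const row of rows) {
    lines.push(headers.map((header) => escapeField(row[header])).join(","));
  }
  return lines.join("\n") + "\n";
}

const people = [
  { id: 1, name: "Doe, Johny", age: 45 },
  { id: 2, name: "Mary", age: 20 },
];
const csvText = toCsv(people, ["id", "name", "age"]);

// Write to a temporary file so the sketch does not overwrite real data.
const outputPath = path.join(os.tmpdir(), "people-example.csv");
fs.writeFileSync(outputPath, csvText, "utf-8");
console.log(csvText);
```

Only the name containing a comma ends up quoted in the output, so the file stays readable while still round-tripping through a quote-aware parser.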

If you use fs.readFile, fs.writeFile, or their synchronous counterparts as in the above examples, Node will read the entire file into memory before processing it. With the createReadStream and createWriteStream functions of the fs module, you can use streams instead to reduce memory footprint and data processing time.

The example below uses the createReadStream function to read from a data.csv file:

    const fs = require("fs");

    fs.createReadStream("data.csv", { encoding: "utf-8" })
      .on("data", (chunk) => {
        // Each chunk is a piece of the file, not necessarily a whole row
        console.log(chunk);
      })
      .on("error", (error) => {
        console.log(error);
      });

Additionally, most of the third-party packages we shall look at in the following subsections also use streams.
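Stream chunks do not line up with rows, so a common next step is to read the stream line by line. The sketch below shows one way to do that with the built-in readline module. The readRows name and the sample file written to the temp directory are assumptions for illustration, and the naive split on commas assumes the fields contain no quoted commas or embedded line breaks.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");
const readline = require("readline");

// Read a CSV file one line at a time instead of loading it whole.
// Assumption: fields contain no embedded line breaks or quoted commas.
async function readRows(filePath) {
  const lines = readline.createInterface({
    input: fs.createReadStream(filePath, { encoding: "utf-8" }),
    crlfDelay: Infinity, // treat \r\n as a single line break
  });

  const rows = [];
  for await (const line of lines) {
    // Skip blank lines and split the rest into fields
    if (line.trim() !== "") rows.push(line.split(","));
  }
  return rows;
}

// Create a small sample file in the temp directory for the demonstration.
const samplePath = path.join(os.tmpdir(), "stream-example.csv");
fs.writeFileSync(samplePath, "id,name,age\n1,Johny,45\n2,Mary,20\n", "utf-8");

const rowsPromise = readRows(samplePath);
rowsPromise.then((rows) => console.log(rows));
```

The sketch collects every row in an array to keep the output easy to inspect. For a large file you would process each line inside the loop instead, so that only one line is held in memory at a time.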

Using the csv-parser package

This is a relatively tiny third-party package you can install from the npm package registry. It is capable of parsing and converting CSV files to JSON.

The code below illustrates how to read data from a CSV file and convert it to JSON using csv-parser. We are creating a readable stream using the createReadStream method of the fs module and piping it to the return value of csv-parser():

    const fs = require("fs");
    const csvParser = require("csv-parser");

    const result = [];

    fs.createReadStream("./data.csv")
      .pipe(csvParser())
      .on("data", (data) => {
        // Each row arrives as an object keyed by the header names
        result.push(data);
      })
      .on("end", () => {
        console.log(result);
      });

There is an optional configuration object that you can pass to csv-parser. By default, csv-parser treats the first row of your data set as field names (headers).

If your dataset doesn't have headers, or successive data points are not comma-delimited, you can pass that information using the optional configuration object. The object has additional configuration keys you can read about in the documentation.

In the example above, we read the CSV data from a file. You can also fetch the data from a server using an HTTP client like Axios or Needle. The code below illustrates how to go about it:

    const csvParser = require("csv-parser");
    const needle = require("needle");

    const result = [];
    const url = "https://people.sc.fsu.edu/~jburkardt/data/csv/deniro.csv";

    needle
      .get(url)
      .pipe(csvParser())
      .on("data", (data) => {
        result.push(data);
      })
      .on("done", (err) => {
        if (err) console.log("An error has occurred");
        else console.log(result);
      });

You need to first install Needle before executing the code above. The get request method returns a stream that you can pipe to csv-parser(). You can also use another package if Needle isn't for you.

The above examples highlight only a tiny fraction of what csv-parser can do. As already mentioned, the implementation of one CSV document may be different from another. csv-parser has built-in functionality for handling some of these differences.

Though csv-parser was created to work with Node, you can use it in the browser with tools such as Browserify.

Using the Papa Parse package

Papa Parse is another package for parsing CSV files in Node. Unlike csv-parser, which works out of the box with Node, Papa Parse was created for the browser. Therefore, it has limited functionality if you intend to use it in Node.

We illustrate how to use Papa Parse to parse CSV files in the example below. As before, we have to use the createReadStream method of the fs module to create a read stream, which we then pipe to the return value of papaparse.parse().

The papaparse.parse function you use for parsing takes an optional second argument. In the example below, we pass the second argument with the header property. If the value of the header property is true, papaparse will treat the first row in our CSV file as column (field) names.

The object has other fields that you can look up in the documentation. Unfortunately, some properties are still limited to the browser and not yet available in Node.

    const fs = require("fs");
    const Papa = require("papaparse");

    const results = [];
    const options = { header: true };

    fs.createReadStream("data.csv")
      .pipe(Papa.parse(Papa.NODE_STREAM_INPUT, options))
      .on("data", (data) => {
        results.push(data);
      })
      .on("end", () => {
        console.log(results);
      });

Similarly, you can also fetch the CSV dataset as a readable stream from a remote server using an HTTP client like Axios or Needle and pipe it to the return value of papaparse.parse() as before.

In the example below, I illustrate how to use Needle to fetch data from a server. It is worth noting that making a network request with one of the HTTP methods like needle.get returns a readable stream:

    const needle = require("needle");
    const Papa = require("papaparse");

    const results = [];
    const options = { header: true };
    const csvDatasetUrl = "https://people.sc.fsu.edu/~jburkardt/data/csv/deniro.csv";

    needle
      .get(csvDatasetUrl)
      .pipe(Papa.parse(Papa.NODE_STREAM_INPUT, options))
      .on("data", (data) => {
        results.push(data);
      })
      .on("end", () => {
        console.log(results);
      });

Using the Fast-CSV package

This is a flexible third-party package for parsing and formatting CSV data sets that combines @fast-csv/format and @fast-csv/parse packages into a single package. You can use @fast-csv/format and @fast-csv/parse for formatting and parsing CSV datasets, respectively.

The example below illustrates how to read a CSV file and parse it to JSON using Fast-CSV:

    const fs = require("fs");
    const fastCsv = require("fast-csv");

    const options = {
      objectMode: true,
      delimiter: ";",
      quote: null,
      headers: true,
      renameHeaders: false,
    };

    const data = [];

    fs.createReadStream("data.csv")
      .pipe(fastCsv.parse(options))
      .on("error", (error) => {
        console.log(error);
      })
      .on("data", (row) => {
        data.push(row);
      })
      .on("end", (rowCount) => {
        console.log(rowCount);
        console.log(data);
      });

Above, we are passing the optional argument to the fastCsv.parse function. The options object is primarily for handling the variations between CSV files. If you don't pass it, Fast-CSV will use the default values. For this illustration, I am using the default values for most options.

In most CSV datasets, the first row contains the column headers. By default, Fast-CSV considers the first row to be a data record. You need to set the headers option to true, as in the above example, if the first row in your data set contains the column headers.

Similarly, as we mentioned in the opening section, some CSV files may not be comma-delimited. You can change the default delimiter using the delimiter option as we did in the above example.

Instead of piping the readable stream as we did in the previous example, we can also pass it as an argument to the parseStream function as in the example below:

    const fs = require("fs");
    const fastCsv = require("fast-csv");

    const options = {
      objectMode: true,
      delimiter: ",",
      quote: null,
      headers: true,
      renameHeaders: false,
    };

    const data = [];

    const readableStream = fs.createReadStream("data.csv");

    fastCsv
      .parseStream(readableStream, options)
      .on("error", (error) => {
        console.log(error);
      })
      .on("data", (row) => {
        data.push(row);
      })
      .on("end", (rowCount) => {
        console.log(rowCount);
        console.log(data);
      });

The functions above are the main functions you can use for parsing CSV files with Fast-CSV. You can also use the parseFile and parseString functions, but we won't cover them here. For more about them, you should read the documentation.

Conclusion

The Comma Separated Values format is one of the most popular formats for data exchange. CSV datasets consist of simple text files readable to both humans and machines. Despite its popularity, there is no universal standard.

The unofficial RFC 4180 technical standard attempts to standardize the format, but some subtle differences exist among the different CSV implementations. These differences exist because the CSV format started before the RFC 4180 technical standard came into being. Therefore, it is common for CSV datasets generated by one application to display incorrectly in another application.

You can use the built-in functionality or third-party packages for reading, writing, and parsing simple CSV datasets in Node. Most of the CSV packages we looked at are flexible enough to handle the subtle differences resulting from the different CSV implementations.


Source: https://blog.logrocket.com/complete-guide-csv-files-node-js/
